Chaos Engineering is an emerging discipline that aims to test the solidity of the socio-technical infrastructure in order to always better preserve the quality of service. This practice experiments with gaps and weaknesses in applications and infrastructure on a distributed system. Complexification and automation of systems make this practice more and more important to maintain the user experience. Experienced for seven years by pure-players like Netflix, it is structured around dedicated processes and tools.

The question posed by this discipline is: How close is your system to the precipice and can sink into chaos?

At any moment, something, somewhere, breaks down. It is no longer a matter of crossing your fingers so that it does not happen, but of building the resilience necessary in our systems to tolerate failures, and of ensuring that this resilience is operational and reliable in production.

And yet, we test!

We have all implemented all the tests necessary for the quality of our applications, and to guarantee compliance with operability standards, to achieve the required level of confidence for the increasingly frequent deployments of our developments.

However, one of the most critical issues in testing practices is the representativeness of non-production environments.

We all face a harsh reality: it is almost impossible and costly to keep up to date.

The deployment of Agile & DevOps approaches is making the subject more complicated by making it possible to deliver more and more frequently, up to several times a day.

Besides, non-production environments are and will be less and less representative when you are in the Cloud, and your infrastructure adapts to your traffic by auto-scaling.

The increasingly advanced customization of our applications – therefore, with data-based behaviors – confronts us with customer journeys that adapt in real-time.

And it’s going to become even worse with the deployment of Artificial Intelligence.

It, therefore, becomes necessary to experiment in production in order to have the right level of confidence in our systems.

Yes, you read that right, we are talking about doing production tests:

- Experiment to test our systems: rather than waiting for a failure, we want to introduce one to test the resilience of the system,

- Experiment to learn: we don’t want to generate chaos for fun, but rather to discover unknown weaknesses in our systems.

It is, however, about experimenting in production on a stable and efficient system.

It is not a game, but real life, with potentially significant human and financial impacts.

The Chaos engineer is not a mad scientist, he is an explorer looking for knowledge of the system he is studying.

What is an experiment?

In the book presented at the end of the article, the Chaos engineers at Netflix Technology Blog proposed this protocol:

- Define what is the question that we want to ask the system: do we want to test the resilience of a component, an application, an organization?

- Define the scope of the experience: is it all or part of the production? is it only the technical environment alone or also include human interventions (monitoring, operation, support),

- Identify precisely the metrics that will validate the experience and possibly stop it instantly in the event of a critical impact,

- Communicate, prevent the organization of the existence of the experiment – to avoid escalation in the event of a critical incident

- Perform the experiment

- Analyze the results, put in place any necessary action plans

- Expand the scope for the next experiment.

However, doing a test once will reassure you about the resilience of your system. Still, with permanent changes, the only way to sleep well at night is to automate the experience so that it is carried out continuously in order to follow the evolution of the system.

Example of experimentation

- Set up Chaos Monkey – simulate failures in a real environment and verify that the computer system continues to function.

The experiment consists of regularly choosing instances randomly in the production environment and deliberately decommissioning them. By repeatedly “killing” instances at random, we ensure that we have correctly anticipated the occurrence of this type of incident by setting up an architecture that is sufficiently redundant for a server failure to have no impact on the service provided…

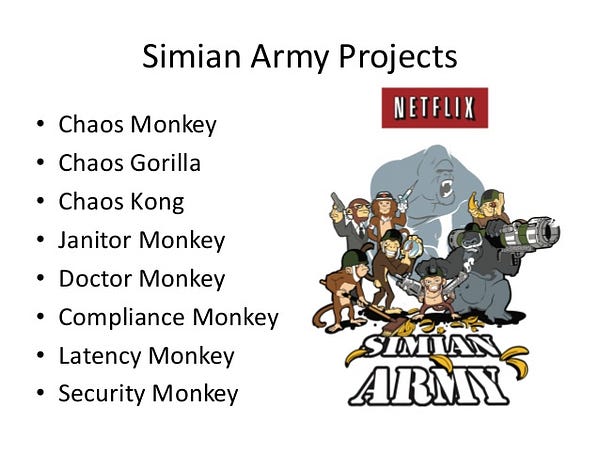

Set up by Netflix in early 2011, they joined the Simian Army which present other types of interruption:

– Chaos Kong (which brings down a complete Amazon availability zone),

– Latency Monkey (which allows testing tolerance for loss performance of an external component),

– Security Monkey (which dismisses all instances that present vulnerabilities), etc.

- Set up a Gameday: to test the resilience of the organization and train it to react in the event of incidents, Jesse Robbins, ex- “Master of Disaster” at Amazon, set up the concept of Gameday which consists of simulate failures to test the teams’ ability to react and return to a nominal situation.

- Set up a Days of Chaos, a variation of GameDay by OUI.sncf for all IT teams and aimed at training in the detection, diagnosis, and resolution of production incidents.

- Set up Disaster Recovery Testing on a recurring basis.

To know more

I recommend this free ebook from O’Reilly: Chaos Engineering, Building Confidence in System Behavior through Experiments